Postgres Take it All

Overview

PostgreSQL is becoming a catch-all solution for simple scenario, and this is a trend, not an accident. A new article full of evidence enforced the ideas I wrote down in the 2019 about Kafka vs PostgreSQL: lets dig into it.

In the last 15 years in the IT are born very nice solution to address ‘facebook effect’ challenge. Some example to explain what I mean.

Back in 2009…

Friends

When you have a graph of friends of friends, a relational database can have an hard day extracting all the information you need. If your database has 1 billion people as records (like Facebook used to) your problem seems intractable. NoSQL database are born.

We are the dot in dot….bubble

You have a big shop like Ikea or Amazon: you sell heterogeneous stuff from plastic bags to reindeer meatballs, passing by desks and chairs. On these scenarios a big t_product table is challenging (Oracle per-table column limit is 1000). For sure every of these things have a price. You can meta-describe it but believe me, you are entering in the meta-dimension madness: for instance Reddit chosed to have just two tables to model everything, a very bold (and crazy) move but okey…we was in 2012…

During this phase Mongo DB emerged.

The castle of Logs around 2010

If you have a social application (Linkedin) you end up managing a lot of streamed data (from cellphones, website, advertisement systems, etc) you need to process in very different way. Also a big trading system has similar need. Sometime you want to re-play the data to reprocess them on a different angle. Kafka emerged around 2011.

In this time frame databases was just databases, and Oracle was the only one providing additional feature on top of them, with high performance (and high costs).

PostgreSQL was known to be painful slow but less bugged than 10 years before.

Fast forward on 2025

Let me address the challenge from another point of view.

You can rent on AWS a 64 CPU+128GB RAM for 14304€ per year. This machine has the same CPU and double the memory of Sun E10000. Fineco used 3 of them in 2000 to manage its trading on line platform. Now it costs less then a 15 day-vacation on Europe (or Japan).

PostgreSQL nowadays offer:

- Json management via native-type support. Schemaless is easy give it a try!

- Full text search support (with stemming included)

- Parallel select SQL

Cloud providers offer PostgreSQL installation with stronger performance enhancement too.

Why a dumb architecture is better than a bad architecture

Often I am called to set up new projects (which are 99% of time tech refresh of legacy one, for sure). These systems interact with other big systems.

When I ask for a volume estimation, things get incredible complex:

- Business stakeholders tend to be a bit optimistic on expected future volumes.

- Data not always are congruent.

On the last system I am working on, about 40% of input transactions was not valid (i.e. hits the limit or are malformed) and so the declared volume was 40% greater then the outcome sent to other systems (!).

On these scenarios, finding the correct sizing of the system upfront is challenging and often impossible.

On some projects, make little sense to propose MongoDB or Kafka without strong evidence (in the form of volume, scope, provisioning costs tradeoff, etc).

Things change…

During the first period of solution rollout, you must have a very fast and simple way of inspecting system status and fixing bugs (mostly small edge case business ask you to fix very fast).

I want to stress you than after first set of deployments, and if your business starts to become successful, things will change very fast and you need also a way to scale in a reliable way.

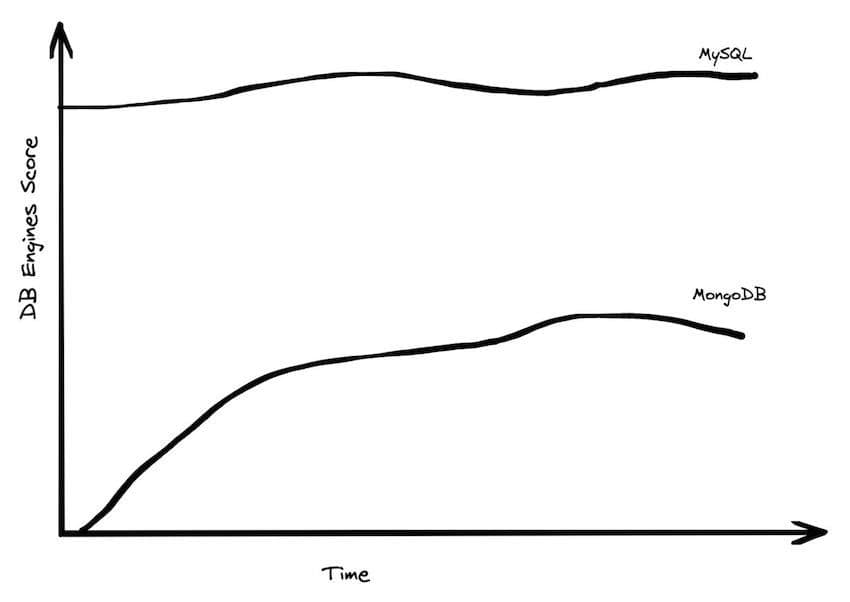

As an example first installation of Facebook just required 1000 USD rented machine and used MySQL.

Last but not least it seems BigData are quite dead, for reason not linked to the IT but to other forces (like legal risks of keeping too much data laying around). These facts bring up another weird situation: a fat MySql/MariaDB installation can often be on par on a MongoDB cluster, simply because you have too little data to justify MongoDB super-powers.

The complexity of managing a fault-tolerant Kafka installation is often underestimated: a replicated passive-active database is far more simple to set-up.

Self Hosted PostgreSQL 2025 (added on December 2025)

Once upon a time it seems reasonable to go on Managed database cloud instances. But now things seems flipped back. This article by Pierce Freeman suggests you can move from a cloud-managed to a self-hosted PostgreSQL instance improving your solution ’s flexibility and also your bill:

- Managed instance costs are a lot higher than the equivalent cloud Linux instance:

[On AWS] A db.r6g.xlarge instance (4 vCPUs, 32GB RAM) now costs $328/month before you add storage, backups, or multi-AZ deployment. For that price, you could rent a dedicated server with 32 cores and 256GB of RAM.

- It is becoming trivial to set up a live-instance and optimize it: look at Pierce Freeman’s project here.

- You can tune connections parameters and improve performance without throwing to it more RAM and vCPU (=even more cost efficient)

- Backups are easy to set up and monitor them require a tiny fraction of sysadmin time every month. Also setting up a cluster seems reasonable easy

The only difference on a managed instance IN THEORY, is that you can sleep at night because something/someone else will fix the trouble. But if you have a startup, your phone will ring anyway, bet on it.

Also, outrages on big cloud provider tend to create problem to multiple sites, exposing your business too. But if you are able to be on line when the vast majority is not, you can increase your revenue; for instance if Amazon.it is in trouble, then your tiny “Xmas Online Presents” shop can gain a momentum.

Conclusion and further reading

It become crucial to analyze your use case. Modern database like PostgreSQL (or Oracle) are ideal for exploring different architectural setup: you can implement small queues, structure key/value data in json column, do full text searches functionalities, and so on. This article can give you more insight on comparing MongoDB and PostgreSQL.

I was amazed on how it is simple to update the search index of a single document in SQLite compared to the index update of an ElasticSearch cluster: you need to recreate the index if you want to remove a wrong text: it is a simple task, but on SQLite you need to know nothing: it is just an update of the relevant row!

And yes SQLite too has minimal text indexing capabilities and english stemming :)

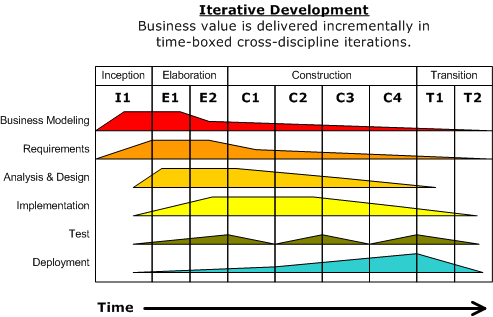

After you embrace the “PostrgreSQL take it all” mantra, you can evolve your infrastructure adding components like RabbitMQ, Kafka, ElasticSearch, Vector Databases, etc in a second phase (and believe be, could be in a far future called “NEVER” or “next guy on that job”).

Update Andy Pavlo, Associate Professor at Carnegie Mellon University’s Computer Science Department and database expert, wrote at beginnig of 2026:

The Dominance of PostgreSQL Continues I first wrote about how PostgreSQL was eating the database world in 2021. That trend continues unabated as most of the most interesting developments in the database world are happening once again with PostgreSQL. The DBMS’s latest version (v18) dropped in November 2025. The most prominent feature is the new asynchronous I/O storage subsystem, which will finally put PostgreSQL on the path to dropping its reliance on the OS page cache. […] […]why I am claiming that the hottest action in databases for 2025 happened with PostgreSQL?

The reason is that most of the database energy and activity is going into PostgreSQL companies, offerings, projects, and derivative systems.

The article is very long and detailed: if you have time, take a read.