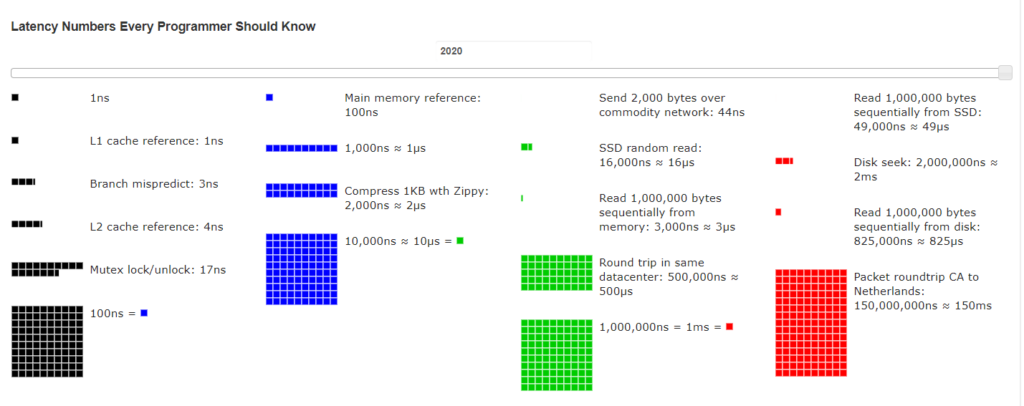

Latency numbers every programmer should know

Modern computers are very, very, very fast. The C/64 ran at about 1 MHz, and you could make a noticeable pause just by running a 10,000-iteration BASIC v2 FOR...NEXT loop.

Today's desktop and laptop CPUs are typically clocked at around 3 GHz (3,000 MHz!), and they are multi-core and superscalar: they can usually execute two or more instructions per core in parallel, and even tiny boards often have several cores (the Raspberry Pi 2 has four ARM cores, for instance). Even an old, slow Intel Centrino has at least two ALUs and can, on average, execute two instructions in parallel (if both are arithmetic).

So if your code takes a second or more to process 50 records, you have a problem, a big problem. To understand the issue better, let's start with the latency table above.

The C/64 had a simple architecture: the CPU was slow enough that the RAM could keep up with it. Sadly, modern chips are far too fast for dynamic RAM.

As a result, an uncached main-memory access can take as long as 100 nanoseconds to complete. Yet even a mechanical (!) hard disk needs only about 2 ms for a seek.

Now measure how long your code takes to run a simple query that returns, say, 50 records.
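A minimal timing sketch in Python, assuming your per-record work can be wrapped in a single function. `time_per_record`, `process_record`, and the record layout are hypothetical names for illustration, not part of any library; `time.perf_counter` is the standard-library high-resolution clock.

```python
import time
from typing import Callable, List, Tuple


def time_per_record(process: Callable[[dict], object],
                    records: List[dict]) -> Tuple[float, float]:
    """Run `process` on each record; return (total seconds, seconds per record)."""
    if not records:
        raise ValueError("need at least one record to time")
    start = time.perf_counter()  # monotonic, high-resolution clock
    for record in records:
        process(record)
    total = time.perf_counter() - start
    return total, total / len(records)


# Hypothetical stand-in for the real per-record work (query, formatting, ...).
def process_record(record: dict) -> dict:
    return {key: str(value).upper() for key, value in record.items()}


if __name__ == "__main__":
    records = [{"id": i, "name": f"row {i}"} for i in range(50)]
    total, per_record = time_per_record(process_record, records)
    print(f"total: {total * 1e3:.3f} ms, per record: {per_record * 1e6:.1f} us")
```

Run it a few times: the first run can include one-time costs, so a single measurement may overstate the steady-state time.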

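Under normal conditions (no network calls, no swapping, etc.), each of your operations takes at most about 100 nanoseconds, and usually far less. One millisecond is one million nanoseconds, so even if every operation were an uncached memory access, you could still perform about 10,000 of them per millisecond. Simple operations that hit the CPU cache take around a nanosecond, so a million of them should finish in roughly 1 ms.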

If you cannot process 50 records in roughly 50 ms, you are using a lot of processing power.

For instance, if you take 1 second to process 50 records, you are spending 20 ms per record. At 100 ns each, that is 200,000 main-memory accesses per record, and truly random ones at that: every single access would have to be a cache miss, because cache hits take far less time.
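The arithmetic in the last few paragraphs can be checked with a few lines of Python. This is a back-of-the-envelope sketch using only the two figures quoted in this post (about 100 ns per uncached main-memory access and about 2 ms per disk seek); `per_record_budget` is a hypothetical helper name, and real latencies vary by machine.

```python
# Rough, order-of-magnitude latencies quoted in this post.
NS_PER_SECOND = 1_000_000_000
NS_PER_MS = 1_000_000
MAIN_MEMORY_ACCESS_NS = 100       # uncached main-memory access
DISK_SEEK_NS = 2 * NS_PER_MS      # mechanical hard-disk seek, about 2 ms


def per_record_budget(total_seconds: float, n_records: int) -> dict:
    """Express the time spent per record as worst-case operation counts."""
    per_record_ns = total_seconds * NS_PER_SECOND / n_records
    return {
        "per_record_ms": per_record_ns / NS_PER_MS,
        "memory_accesses": per_record_ns / MAIN_MEMORY_ACCESS_NS,
        "disk_seeks": per_record_ns / DISK_SEEK_NS,
    }


if __name__ == "__main__":
    b = per_record_budget(1.0, 50)  # 1 second for 50 records
    print(f"{b['per_record_ms']:.0f} ms per record = "
          f"{b['memory_accesses']:,.0f} uncached memory accesses = "
          f"{b['disk_seeks']:.0f} disk seeks")
    # prints: 20 ms per record = 200,000 uncached memory accesses = 10 disk seeks
```

With the 50 ms target for 50 records, the same helper gives 1 ms per record, or about 10,000 uncached memory accesses each.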

Sad conclusion

The world is complex, but when my app cannot produce a tiny Excel file with 4,000 rows in under a second, I always assume the fault lies in our code. And often it does.